Apple’s new FAQ document, entitled “Expanded Protections for Children,” seeks to address concerns over user privacy following last week’s announcement of the new CSAM detection feature in iCloud Photos, as well as the new communication safety features in Messages.

“Since we announced these features, many stakeholders including privacy organizations and child safety organizations have expressed their support of this new solution, and some have reached out with questions,” reads the FAQ. “This document serves to address these questions and provide more clarity and transparency in the process.”

Apple attempts to clear up some misunderstanding over the new features by differentiating them, explaining that communication safety in Messages “only works on images sent or received in the Messages app for child accounts set up in Family Sharing,” while CSAM detection in iCloud Photos “only impacts users who have chosen to use iCloud Photos to store their photos… There is no impact to any other on-device data.”

These two features are not the same and do not use the same technology.

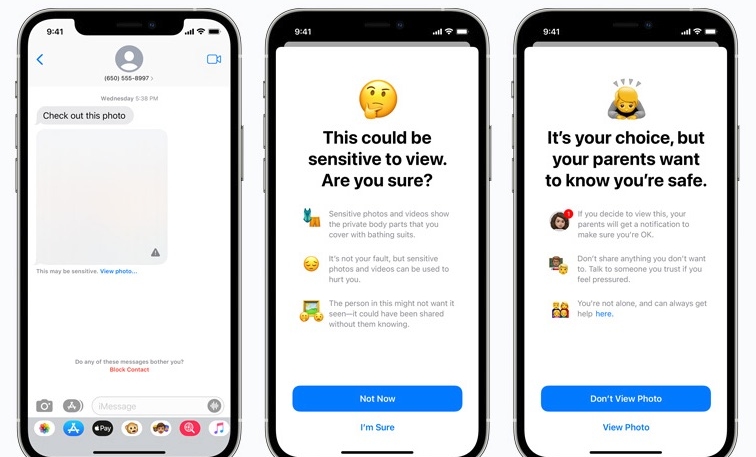

Communication safety in Messages is designed to give parents and children additional tools to help protect their children from sending and receiving sexually explicit images in the Messages app. It works only on images sent or received in the Messages app for child accounts set up in Family Sharing. It analyzes the images on-device, and so does not change the privacy assurances of Messages. When a child account sends or receives sexually explicit images, the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view or send the photo. As an additional precaution, young children can also be told that, to make sure they are safe, their parents will get a message if they do view it.

The second feature, CSAM detection in iCloud Photos, is designed to keep CSAM off iCloud Photos without providing information to Apple about any photos other than those that match known CSAM images. CSAM images are illegal to possess in most countries, including the United States. This feature only impacts users who have chosen to use iCloud Photos to store their photos. It does not impact users who have not chosen to use iCloud Photos. There is no impact to any other on-device data. This feature does not apply to Messages.

The rest of the document is split into three sections that attempt to answer the following commonly asked questions:

Communication safety in Messages

- Who can use communication safety in Messages?

- Does this mean Messages will share information with Apple or law enforcement?

- Does this break end-to-end encryption in Messages?

- Does this feature prevent children in abusive homes from seeking help?

- Will parents be notified without children being warned and given a choice?

CSAM detection

- Does this mean Apple is going to scan all the photos stored on my iPhone?

- Will this download CSAM images to my iPhone to compare against my photos?

- Why is Apple doing this now?

Security for CSAM detection for iCloud Photos

- Can the CSAM detection system in iCloud Photos be used to detect things other than CSAM?

- Could governments force Apple to add non-CSAM images to the hash list?

- Can non-CSAM images be “injected” into the system to flag accounts for things other than CSAM?

- Will CSAM detection in iCloud Photos falsely flag innocent people to law enforcement?

Apple has faced heavy criticism from privacy advocates, security researchers, and others for its decision to include the new technology alongside the release of iOS 15 and iPadOS 15, expected in September.