If you watched Apple’s WWDC 2023 keynote address, you may have noticed that the new Apple Vision Pro “spatial computing” device has no physical mouse, joystick, keyboard, or other physical controller. Instead, the headset uses eye tracking and hand gestures to control objects in the virtual space.

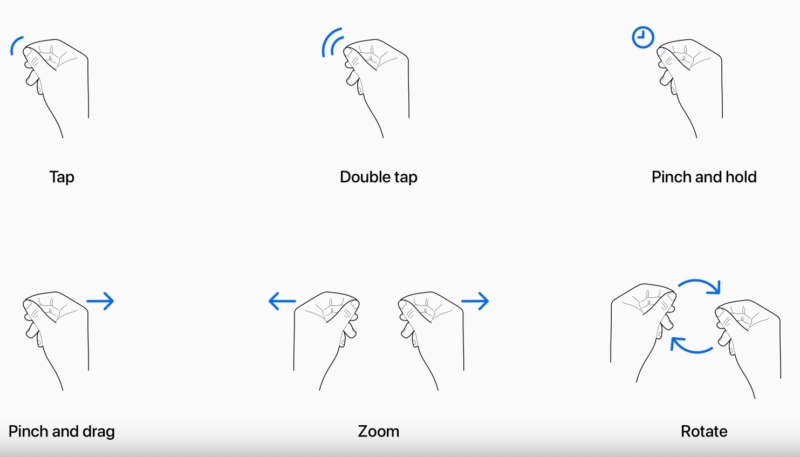

In a recent developer session, Apple told developers about the specific gestures that can be used to interact with Vision Pro:

- Tap – Tapping your thumb and index finger together signals the headset that you want to tap the virtual element you’re looking at on the headset display. Some users have dubbed this a pinch. It is the virtual equivalent of tapping the screen of your iPhone.

- Double Tap – Tapping twice with a finger performs (logically enough) a double-tap gesture.

- Pinch and Hold – Pinching and holding lets you do things like highlight on-screen text.

- Pinch and Drag – Pinch and drag to scroll and to move windows around. You can scroll both horizontally and vertically, and the faster you move your hand, the faster you’ll scroll.

- Zoom – Zoom is one of two two-handed gestures. Pinch your fingers together and pull your hands apart to zoom in. You can also resize a window by dragging its corner.

- Rotate – Rotate is the second two-handed gesture and involves pinching the fingers together and rotating the hands. This allows you to manipulate virtual objects on the headset’s screen.

The numerous cameras in the Vision Pro will track your eye movements to detect where you’re looking with excellent accuracy. Eye position will help target what elements you want to interact with via your hand gestures. In other words, by looking at an app icon or on-screen element you can target it and highlight it, and then you can interact with it by using a gesture.

Hand gestures do not need to be grand (no “jazz hands” required), and Apple even encourages users to keep their hands in their laps, which keeps their hands and arms from tiring from being held in the air. Because the cameras can track precise movements, a tiny pinch gesture works as the equivalent of a tap.

You can select and manipulate objects that are both close to you and far from you. For objects within reach, you can reach out and use your fingertips to interact with them.

The headset also supports hand movements such as air typing, though those who have been able to demo a unit say they have not yet been able to try this feature out. The various gestures can be used together. For example, if you wish to create a drawing, you’ll be able to look at a spot on the virtual canvas, select a brush using your hand, and then use a gesture to draw in the air. By looking elsewhere, you’ll move the cursor automatically to the spot you’re looking at.

Developers can also create custom gestures for use in their apps to perform custom actions. The custom gestures will need to be completely distinct from the system’s built-in gestures or common hand movements that people might use. Developers are also told to ensure that any gestures they come up with can be repeated without hand strain.

In addition to using hand and eye gestures, users can also use Bluetooth keyboards, trackpads, mice, and game controllers with the headset. Voice-based search and dictation tools are also available.

Many who have been able to give the Vision Pro a try have used the same word to describe the control system: “intuitive.” The gestures have been designed to work similarly to multitouch gestures on the iPhone and the iPad, and reactions from users have been positive.

(Via MacRumors)