An international coalition of more than 90 policy and rights groups has published an open letter urging Apple to drop its plans to “build surveillance capabilities into iPhones, iPads, and other products.” The Cupertino firm recently announced plans to scan iCloud photo libraries for child sexual abuse material (CSAM).

Via Reuters:

“Though these capabilities are intended to protect children and to reduce the spread of child sexual abuse material (CSAM), we are concerned that they will be used to censor protected speech, threaten the privacy and security of people around the world, and have disastrous consequences for many children,” the groups wrote in the letter.

Some signatories of the letter, which was organized by the U.S.-based nonprofit Center for Democracy & Technology (CDT), are concerned that some governments could misuse the system to search for political and other sensitive content.

“Once this backdoor feature is built in, governments could compel Apple to extend notification to other accounts, and to detect images that are objectionable for reasons other than being sexually explicit,” reads the letter.

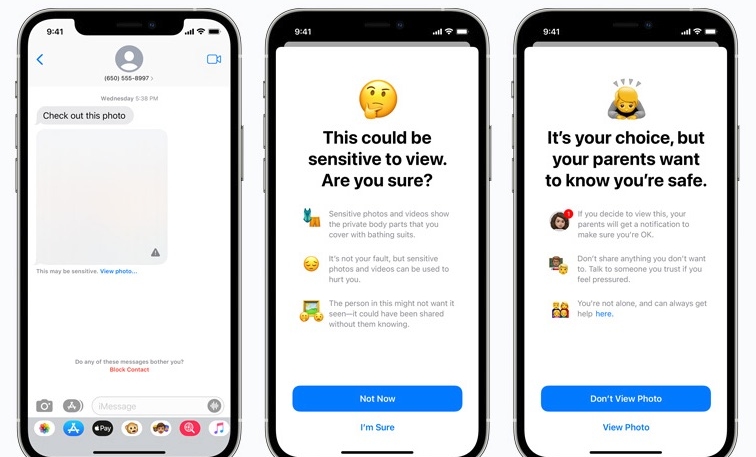

The coalition’s letter also calls out a change to iMessage in family accounts, which would try to identify and blur nudity in children’s messages, letting them view it only if parents are notified. Signatories say the move could endanger children in intolerant homes or those seeking educational material. They also say the change will break end-to-end encryption for iMessage, which they say Apple has staunchly defended in other contexts.

Signers include groups based in Brazil, India, Mexico, Germany, Argentina, Ghana, and Tanzania. Other signatories include the American Civil Liberties Union, Electronic Frontier Foundation, Access Now, Privacy International, and the Tor Project.

Apple’s plan to detect known CSAM images stored in iCloud Photos has proven controversial, raising concerns among security researchers, academics, privacy groups, and others that governments could abuse the system as a form of mass surveillance.

The Cupertino firm has attempted to address these concerns with an FAQ page and additional documents explaining how the system will work and stating that the company will refuse demands to expand the image-detection system to include materials other than CSAM images. However, as noted by Reuters, Apple has not said that it would pull out of a market rather than obey a court order.

Apple employees have also reportedly raised concerns internally over the company’s plan.